Deciding how to help

Todd M. Gureckis · 6 minutes to read

Helping behavior is a fundamental aspect of human behavior and of society at large. We give strangers directions when they are lost, we pick things up for people when they drop them, and we help people lift something too heavy to pick up alone. This makes it a fascinating area to study in cognitive science.

What I find interesting about helping behavior is that it is often quite unclear how to help when you aren't told exactly what to do.

If I'm picking something heavy up and I ask a friend, "Can you help me lift this?" I'm telling this person exactly what I want them to do to help me. In contrast, there are many situations where several complex social inferences have to happen before you can help. For example, consider the classic case from Warneken & Tomasello (2006) of the adult trying unsuccessfully to put some books into the cabinet. Please watch if you haven't seen this before:

What is going on in the mind of this helpful little kid? Here's a proposed, and perhaps non-exhaustive, list of inferences that it seems [1] this child had to go through:

- Recognizing that the adult is in need of help.

- Determining that the child could provide the necessary help.

- Deciding that no one else could provide the help (like the other adult in the room).

- Inferring what the goal of the adult likely is.

- Deciding what actions to take to provide the help to achieve the goal.

This last step - deciding what to do to help - is particularly complicated. While it seems intuitive that the child could help by opening the cabinet, there are several other possibilities as well. For example, the child could offer to take one or more of the books away from the adult to free their hands. The child could give advice, like suggesting putting the books down first. The child could go and get another adult to help, and so on.

Some of these are likely better options than others in terms of several features we might think about as "costs." For example, maybe it is less costly just to open the cabinet than to try to offer to hold some of the books, particularly for a small child who lacks substantial book-holding strength.

Whatever the sum total of forces at play here, the important thing from my perspective is the way this complex inference seems to unfold very quickly and effortlessly for this young child.

If you doubt it is amazing, here's a challenge: design an artificial intelligence agent that can genuinely determine how to help people at the level of this 18-month-old child. We might have some success at hard-coding the solution to this particular helping situation, but given how varied situations are in general, it would seem quite difficult to develop a system with this degree of sophistication.

Target paper

Bridgers, S. and Gweon, H. (2018). Means-inference as a Source of Variability in Early Helping. Frontiers in Psychology, 9, 1735.

This brings me to a paper by Bridgers & Gweon (2018), which I'll refer to as B&G. B&G are also really interested in these examples from Tomasello and, like me, are puzzled about the question of how to help. My slightly uninformed sense is that much of the work on helping has focused on altruism and things like the bystander effect, which primarily emphasize the decision to want to help. From the paper:

Wanting to help, however, is not the same as actually helping. For our prosocial motivation to lead to an action, it is also critical to figure out how to help. Depending on what others want, what went wrong, and what we can do to help, we may choose to help others in different ways, or not help at all. (Bridgers & Gweon, 2018)

Of course, this question of "how to help" can't be addressed separately from the question of "should I help?" because the "should" question depends on what the help actually involves (e.g., "What costs will I pay in terms of time or effort to help successfully?").

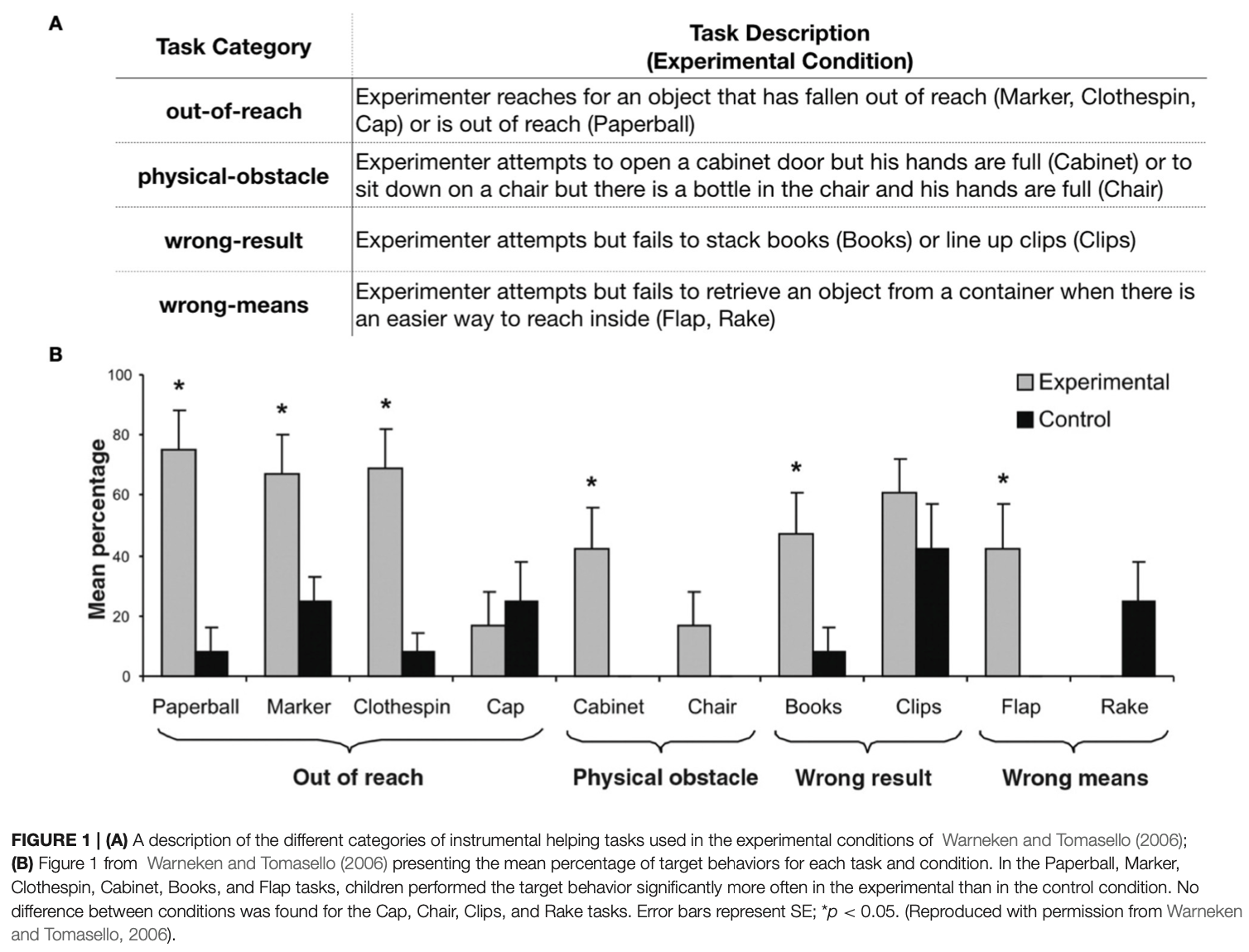

B&G introduce the term means-inference to refer to "identifying the cause of the problem and the appropriate means to resolve the issue" (page 3). Their paper starts from a closer look at some of the example scenarios from Warneken & Tomasello (2006). They point out that there is substantial variability in the percentage of times children actually do help in different scenarios.

In the original study there were 10 scenarios where an adult demonstrated some failure to achieve a task (by dropping things or running into a physical obstacle like the cabinet from the video). In a few cases, children in the "experimental condition" (meaning one where help was warranted) didn't help very much (e.g., "Rake", "Chair", and maybe "Cap"). B&G suggest that these examples specifically were ones where the means-inference might have been challenging or complicated. For example, the children might not have had experience with the object that would remove the obstacle (e.g., they didn't know how to use a rake to help reach something).

While I find this idea interesting, things get a little murky for me there because there are several factors involved, including past knowledge, proper perception, and an analysis of the current situation. My impulse is to want to identify what the real bottleneck on performance is, but I get that these are very rich naturalistic interactions and so multiple factors can be at play. Still, I think a more formal analysis can often be illuminating.

The paper uses the observation about the variability in successful helping by these children to lay out several aspects of means-inference.

Causal analysis: The first is that identifying how to help may require an intuitive causal analysis of the situation. Through this causal analysis (particularly through counterfactual thinking) one can determine what would happen if obstacle X were altered. They suggest young children have this ability based on other studies, but of course at a computational level constructing the causal model for the scenario is itself quite challenging.
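To make the counterfactual idea concrete for myself, here is a minimal sketch of the cabinet scene. Everything in it is my own assumption rather than anything from B&G: the variable names, the toy causal rule in `books_shelved`, and the idea of testing one altered variable at a time. It also sidesteps the hard part, which is building the causal model in the first place.

```python
def books_shelved(state):
    """Toy causal rule (assumed): the adult succeeds if the door is already
    open, or if they have a free hand to open it themselves."""
    return state["door_open"] or state["hands_free"]


# The situation as observed in the video, plus one irrelevant variable.
ACTUAL = {"door_open": False, "hands_free": False, "lights_on": True}


def counterfactual_fixes(state, outcome):
    """Return the variables whose alteration alone would turn failure into success."""
    fixes = []
    for var in state:
        altered = dict(state)
        altered[var] = not state[var]  # "what if this one thing were different?"
        if outcome(altered) and not outcome(state):
            fixes.append(var)
    return fixes


print(counterfactual_fixes(ACTUAL, books_shelved))
```

Under these assumptions the analysis flags both the door and the adult's full hands as possible points of intervention and ignores the lights, which lines up with the intuition that opening the cabinet and taking some books are both candidate ways to help.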

Cost-benefit analysis: The second is that helpers need to consider a cost-benefit analysis of each possible means-inference. For example, once you recognize one potential helping action or sequence of actions, you need to consider how much effort or time it will take to perform, the odds it will be successful, and so on. B&G talk about this as "feasibility," which seems right. I think there's potentially more in that, though, about considering how the cost that I pay as the helper lowers the cost to, or benefits, the person I am helping (the helpee). It raises questions in computational terms about something we might call "value alignment." Expending my cost to lower your cost requires making some informed decision about the things you really value.
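Here is one way the cost-benefit idea could be written down, as a rough sketch rather than B&G's model. The `HelpingAction` fields, the `other_regard` weight, and all of the numbers are hypothetical values I made up for the cabinet example.

```python
from dataclasses import dataclass


@dataclass
class HelpingAction:
    name: str
    helper_cost: float     # effort or time the helper pays (made-up units)
    p_success: float       # chance the action actually resolves the problem
    helpee_benefit: float  # value to the helpee if the action succeeds


def net_value(action, other_regard=1.0):
    """Expected benefit to the helpee, weighted by how much the helper cares,
    minus what the helper has to pay."""
    return other_regard * action.p_success * action.helpee_benefit - action.helper_cost


def best_action(actions, other_regard=1.0):
    """Pick the highest-valued action, or None if nothing beats not helping (value 0)."""
    best = max(actions, key=lambda a: net_value(a, other_regard))
    return best if net_value(best, other_regard) > 0 else None


# Hypothetical numbers for a small child facing the cabinet scene.
CABINET_OPTIONS = [
    HelpingAction("open the cabinet", helper_cost=1.0, p_success=0.9, helpee_benefit=5.0),
    HelpingAction("hold some books", helper_cost=4.0, p_success=0.5, helpee_benefit=5.0),
    HelpingAction("fetch another adult", helper_cost=3.0, p_success=0.8, helpee_benefit=5.0),
]

choice = best_action(CABINET_OPTIONS)
print(choice.name if choice else "don't help")
```

With these made-up numbers the child opens the cabinet, and turning `other_regard` down far enough makes not helping the best option. The `helpee_benefit` column is where the "value alignment" question hides, since the helper has to guess it.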

Helping in other ways: Another issue that I had not considered is the fact that some analysis of a helping situation might result in something other than help. For example, a helper might decide to provide comfort or to provide information (teach) instead of help. I think I did consider the teaching element in my intuitive consideration of the cabinet case, where it makes sense that a child (maybe slightly older than 18 months) might tell the adult "put the books down first!"

Ultimately, B&G propose that helping is a complex form of decision-making:

... the production and form of prosocial behaviors are more than responses to cues or triggers; they are the output of a sophisticated decision-making process about the most effective way to help. (Bridgers & Gweon, 2018)

The paper ends with a call to arms for computational accounts of helping, which might help clarify some of the issues here, particularly in interpreting past developmental claims. Since I'm not as personally invested in the developmental arguments, I'm a bit less sure that a computational analysis of these problems will change anyone's mind. However, I do think that this idea of "helping as a decision-making problem" has a lot of important implications, not only for understanding human behavior but also for developing more helpful AI technologies that coexist alongside humans. Maybe I'll write something more about that in the future.

One thought about the computational side is that B&G seem to propose a particular decomposition of helping into several inference tasks. An alternative view I can imagine some people arguing is that it is all part of one "unified" decision analysis. For example, a helper might effectively just be planning actions to maximize utility (which includes some other-regarding preferences). The "means" analysis here is less a causal or diagnostic problem-solving process in the way that B&G describe and more just like planning the best course of action given a model of the world and the minds of other agents. This distinction might not be as strong as it first seems, but I do sort of wonder what the most promising approach is.
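As a rough illustration of what the "unified" view might look like, here is a sketch in which the helper infers the adult's goal with Bayes' rule and then picks the action with the highest expected utility. The goals, probabilities, payoffs, and the `weight` on the adult's utility are all hypothetical, and this is only one of many ways such a planner could be set up.

```python
# Hypothetical goals the adult might have, with the helper's prior over them.
PRIOR = {"books in cabinet": 0.5, "books on table": 0.5}

# Assumed P(adult bumps into the closed cabinet | goal).
LIKELIHOOD = {"books in cabinet": 0.9, "books on table": 0.1}

# Assumed payoff to the adult for each (helper action, adult goal) pair.
HELPEE_UTILITY = {
    "open cabinet": {"books in cabinet": 5.0, "books on table": 0.0},
    "clear table": {"books in cabinet": 0.0, "books on table": 5.0},
    "do nothing": {"books in cabinet": 0.0, "books on table": 0.0},
}
HELPER_COST = {"open cabinet": 1.0, "clear table": 1.0, "do nothing": 0.0}


def posterior(prior, likelihood):
    """Bayes' rule over the adult's possible goals."""
    unnormalized = {g: prior[g] * likelihood[g] for g in prior}
    total = sum(unnormalized.values())
    return {g: v / total for g, v in unnormalized.items()}


def expected_utility(action, belief, weight):
    """Helper's utility: weighted expected payoff to the adult minus own cost."""
    helpee = sum(belief[g] * HELPEE_UTILITY[action][g] for g in belief)
    return weight * helpee - HELPER_COST[action]


def plan(belief, weight=1.0):
    """Choose the action that maximizes the helper's expected utility."""
    return max(HELPER_COST, key=lambda a: expected_utility(a, belief, weight))


belief = posterior(PRIOR, LIKELIHOOD)
print(plan(belief))
```

Nothing here is labeled as a separate "means-inference" step. The choice of how to help falls out of the same maximization that decides whether to help at all, and setting `weight` to zero makes "do nothing" the best plan.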

Overall, Bridgers & Gweon (2018) was an enjoyable read. It lays out some interesting future research directions and I really like the speculative dive into an existing dataset to frame the issue.

References

- Warneken, F., & Tomasello, M. (2006). Altruistic helping in human infants and young chimpanzees. Science, 311, 1301. https://doi.org/10.1126/science.1121448

- Bridgers, S., & Gweon, H. (2018). Means-inference as a Source of Variability in Early Helping. Frontiers in Psychology, 9, 1735.

Footnotes

[1] I want to be a little careful here in assuming that my way of breaking down this problem is the only approach. For instance, it's possible that several simpler things are going on. The adult grunts and so may essentially have said "help me," simplifying step 1. Further, the child is aware of this weird situation of an experiment in a lab and so might have participated in "the game" to some degree. Ecologically, perhaps the situation of helping someone with their hands full has come up quite often in the lives of a typical 18-month-old and so they are simply mimicking a behavior they have seen an adult perform in the past. So don't take me too literally here.